Project: Web-to-VR and accessibility

Role: Project Manager & UX Designer

In 2021, I led a small project exploring accessibility in VR. We created a web app that transformed any WordPress site into an environment viewable in VR, and used it to test the possibilities and limits of accessibility in virtual reality.

My role

As project manager and interaction designer, I planned and structured the project and defined the interactions for the VR experience. My colleague handled the technical implementation using React and Three.js.

Design and development

We built a web app that could take any URL and automatically detect whether it pointed to a WordPress site. If it did, the app used the WordPress API to load the site's content into a React-based VR environment. It turned website assets (text, images, and media) into interactive elements within a 3D landscape. We also developed a WordPress plugin that would let a hypothetical user upload custom 3D objects and panoramic images, enabling personalized and engaging VR experiences.
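
As a rough illustration of the detection step, the sketch below probes a URL for the WordPress REST API index at `/wp-json/`. If the index lists the core `wp/v2` namespace, the sketch loads recent posts and turns them into simple text-panel descriptors. This is not the project's actual code, and the app may have detected WordPress differently. The function names, the `TextPanel` shape, and the injected `fetcher` are hypothetical. The sketch also assumes WordPress is installed at the domain root, and its HTML stripping is deliberately crude.

```typescript
// A minimal sketch, assuming the site exposes the standard WordPress REST API
// at /wp-json/ on its domain root. All names here are hypothetical.

/** The subset of a WordPress REST API post object this sketch reads. */
interface WpPost {
  id: number;
  title: { rendered: string };
  content: { rendered: string };
}

/** Hypothetical descriptor for a text panel placed in the 3D scene. */
interface TextPanel {
  id: number;
  heading: string;
  body: string;
}

/** Minimal fetch-like signature, so the sketch works with fetch() or a test stub. */
type Fetcher = (url: string) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

/** Builds the REST API index URL, assuming WordPress sits at the domain root. */
function apiIndexUrl(siteUrl: string): string {
  return new URL("/wp-json/", siteUrl).toString();
}

/** The API index lists registered namespaces; core content routes live under "wp/v2". */
function looksLikeWordPress(index: unknown): boolean {
  const namespaces = (index as { namespaces?: unknown })?.namespaces;
  return Array.isArray(namespaces) && namespaces.includes("wp/v2");
}

/** Crude tag stripping for display on a 3D panel; does not decode HTML entities. */
function stripTags(html: string): string {
  return html.replace(/<[^>]*>/g, "").replace(/\s+/g, " ").trim();
}

/** Converts posts into text-panel descriptors for the scene. */
function toTextPanels(posts: WpPost[]): TextPanel[] {
  return posts.map((p) => ({
    id: p.id,
    heading: stripTags(p.title.rendered),
    body: stripTags(p.content.rendered),
  }));
}

/** Returns text panels for a WordPress site, or null if the URL does not look like one. */
async function loadPanels(siteUrl: string, fetcher: Fetcher): Promise<TextPanel[] | null> {
  const indexUrl = apiIndexUrl(siteUrl);
  const indexRes = await fetcher(indexUrl).catch(() => null);
  if (!indexRes || !indexRes.ok) return null;

  // Non-WordPress sites may answer with HTML, so tolerate JSON parse failures.
  const index = await indexRes.json().catch(() => null);
  if (!looksLikeWordPress(index)) return null;

  const postsRes = await fetcher(`${indexUrl}wp/v2/posts?per_page=10`);
  if (!postsRes.ok) return null;
  return toTextPanels((await postsRes.json()) as WpPost[]);
}
```

In a browser or Node 18+, one could call `loadPanels("https://example.com", (url) => fetch(url))`, which resolves to `null` for sites that do not look like WordPress. Probing the REST index, rather than inspecting the homepage's response headers, is a deliberate choice. WordPress normally sends CORS headers on REST responses, so a cross-origin browser app can usually read them, although some hosts or plugins disable the REST API.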

Accessibility exploration

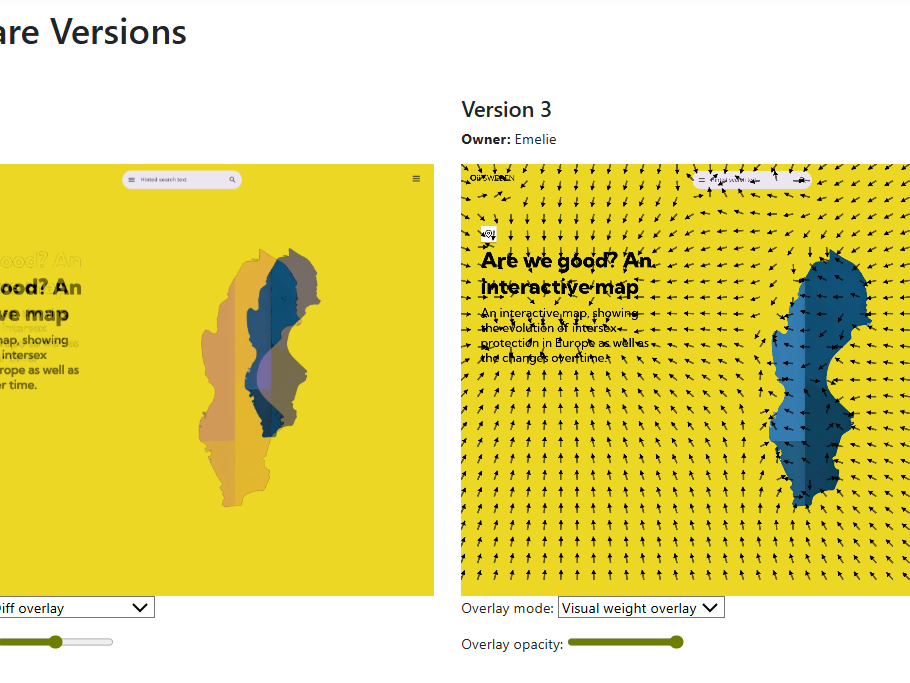

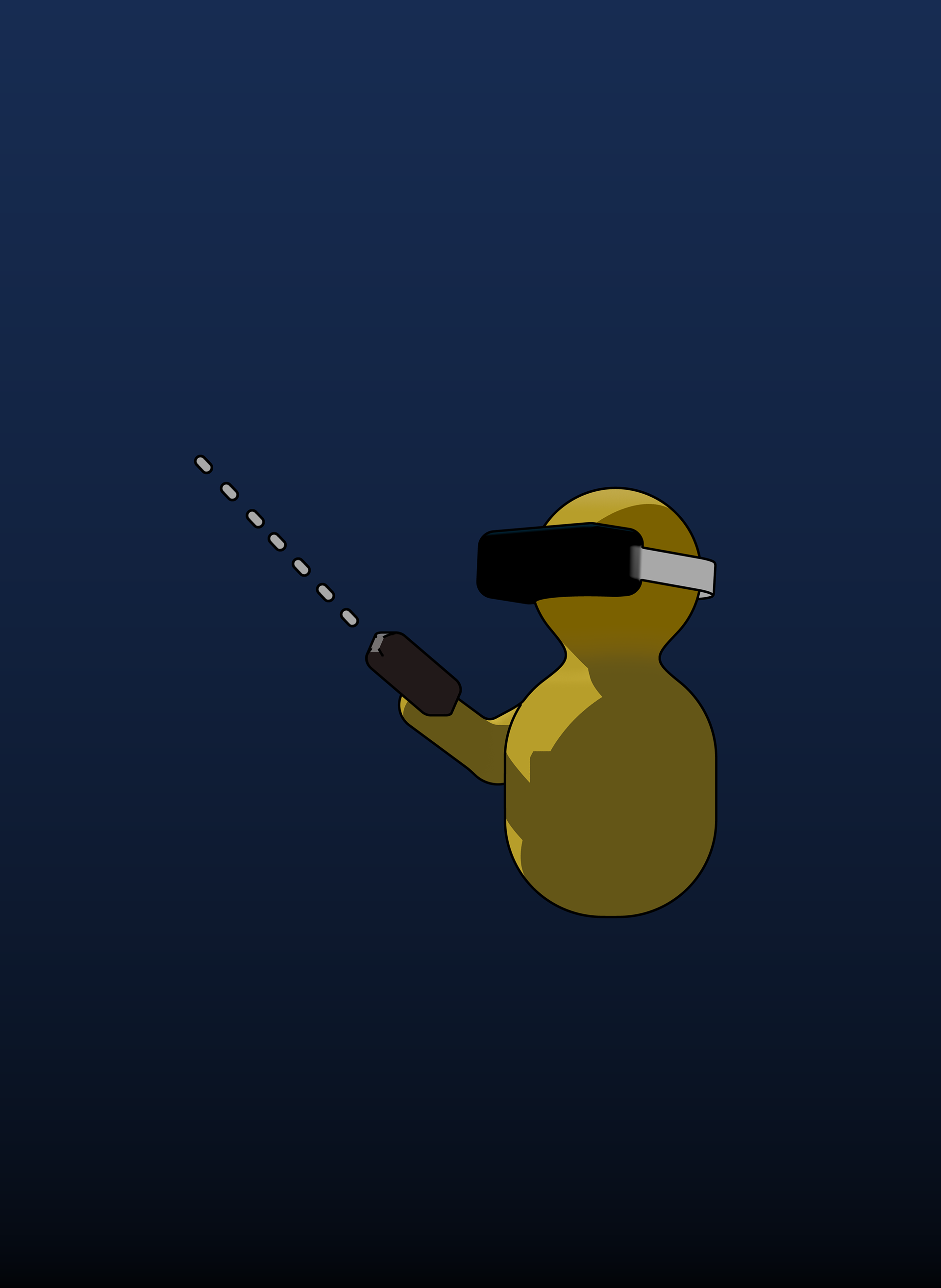

To explore accessibility in VR in depth, we developed and tested our own WebVR text-to-speech plugin. This became the core focus of the project: understanding how virtual reality could align with existing accessibility guidelines such as WCAG, and identifying where those standards might fall short. In particular, we created a "point-to-speak" interaction that lets users in VR select text simply by looking at it and then start speech playback.
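
One way to prototype a point-to-speak interaction is shown below. A gaze ray from the headset picks the nearest text panel, and a dwell timer starts speech once the gaze has rested on that panel long enough. This is an illustrative sketch under stated assumptions, not the project's plugin. Panels are approximated as bounding spheres, and the dwell trigger, threshold, and all names are hypothetical. Speech output is an injected callback. In a Three.js scene, `Raycaster` would normally handle the hit test. In a browser, the callback could wrap the Web Speech API call `speechSynthesis.speak(new SpeechSynthesisUtterance(text))`.

```typescript
// A dependency-free sketch of gaze-based "point-to-speak". Assumptions: each
// panel is approximated by a bounding sphere, the gaze direction is normalized,
// and speech fires after a dwell time. All names are hypothetical.

type Vec3 = [number, number, number];

interface SpeakablePanel {
  id: string;
  center: Vec3;
  radius: number;
  text: string;
}

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

/** Distance along a normalized ray to the first sphere hit, or null on a miss. */
function raySphere(origin: Vec3, dir: Vec3, center: Vec3, radius: number): number | null {
  const oc = sub(origin, center);
  const b = dot(oc, dir);
  const c = dot(oc, oc) - radius * radius;
  const disc = b * b - c;
  if (disc < 0) return null;
  const s = Math.sqrt(disc);
  // Prefer the near hit; fall back to the far hit if the origin is inside the sphere.
  const t = -b - s >= 0 ? -b - s : -b + s;
  return t >= 0 ? t : null;
}

/** Returns the nearest panel under the gaze ray, if any. */
function pickPanel(origin: Vec3, dir: Vec3, panels: SpeakablePanel[]): SpeakablePanel | null {
  let best: SpeakablePanel | null = null;
  let bestT = Infinity;
  for (const p of panels) {
    const t = raySphere(origin, dir, p.center, p.radius);
    if (t !== null && t < bestT) {
      bestT = t;
      best = p;
    }
  }
  return best;
}

/** Calls `speak` once when the gaze has rested on one panel for `thresholdMs`. */
class DwellToSpeak {
  private currentId: string | null = null;
  private dwellMs = 0;
  private spoken = false;
  private readonly thresholdMs: number;
  private readonly speak: (text: string) => void;

  constructor(thresholdMs: number, speak: (text: string) => void) {
    this.thresholdMs = thresholdMs;
    this.speak = speak;
  }

  /** Call once per frame with the panel under the gaze and the frame time in ms. */
  update(target: SpeakablePanel | null, deltaMs: number): void {
    const id = target ? target.id : null;
    if (id !== this.currentId) {
      // Gaze moved to a different panel (or to nothing): restart the dwell timer.
      this.currentId = id;
      this.dwellMs = 0;
      this.spoken = false;
      return;
    }
    if (!target || this.spoken) return;
    this.dwellMs += deltaMs;
    if (this.dwellMs >= this.thresholdMs) {
      this.spoken = true; // Speak once per dwell, not on every subsequent frame.
      this.speak(target.text);
    }
  }
}
```

Each frame, a render loop would call `dwell.update(pickPanel(headPosition, gazeDirection, panels), deltaMs)`. A dwell trigger removes the need for a controller button, which can help users with limited hand mobility. The trade-off is that dwell can start speech unintentionally, so an explicit confirm action is a common alternative.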

Throughout this process, we continually returned to WCAG principles, exploring how established guidelines could be translated into immersive environments. Our goal was not only to ensure basic accessibility compliance but also to experiment with new ideas that could shape the future of inclusive VR design.

Outcomes

The final result was a working prototype demonstrating the potential for accessible VR interactions, laying the groundwork for future research. This project has since inspired my ongoing exploration of accessibility in immersive environments. I'm currently continuing this work, developing new approaches that build upon these initial findings, with the goal of publishing further insights in the near future.
